Table of contents

- Introduction

- 1. Certification

- 2. HyperText Markup Language (HTML)

- 3. Cascading Style Sheets (CSS)

- 4. Static Website

- 5. HyperText Transfer Protocol Secure (HTTPS)

- 6. Domain Name System (DNS)

- 7. JavaScript

- 8. Database

- 9. Application Programming Interface (API)

- 10. C#

- 11. Tests

- 12. Infrastructure-as-Code (IaC)

- 13. Source Control

- 14. Continuous Integration/Continuous Deployment (CI/CD) - Back End

- 15. CI/CD - Front End

- 16. Blog Post

- Conclusion

Introduction

To continue my professional development, I went looking for a project that would deepen my knowledge of cloud computing. A preference for project-based learning led me to the Cloud Resume Challenge and away from the sticky world of video tutorials and generic to-do list projects.

The Cloud Resume Challenge is a project designed to give participants hands-on experience working in the cloud. It covers core skills for modern cloud development and management, including networking, serverless computing, Infrastructure-as-Code, source control, and Continuous Integration/Continuous Deployment (CI/CD) pipelines. There are instruction sets for each of the three major cloud providers: Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure. I selected Azure because it is the provider I am familiar with from my work. Throughout the project, I provisioned and configured several Azure resources, including:

- Azure Front Door and CDN Profile

- Azure Endpoint

- DNS Zone

- Storage Account

  - Table Storage

- Azure Key Vault

- App Service Plan

- Azure Function

Completing this project was an intensely valuable experience. Over the course of a month, I learned how much I didn't know about working in the cloud, despite some overconfidence at the outset of the challenge. After all this effort, I am proud to share https://joshuacrawford.dev/ and my experience completing each step of the project.

1. Certification

The first item in the challenge is to earn a certification from the cloud provider you are completing the challenge with. I studied for and passed the AZ-900 Azure Fundamentals certification. I believe this was the source of my overconfidence as I began the challenge. After completing the certification I could talk about the cloud, but working in the cloud was another matter. Luckily, that is exactly what this challenge is meant to address.

2. HyperText Markup Language (HTML)

HTML is the standardized system for tagging documents to be displayed as a webpage. This standardization gives meaning and structure to the websites we visit every day.

I had already worked with HTML throughout my schooling and career, so this part of the challenge and the CSS section that followed were a welcome refresher on skills I have not used since starting my back-end-focused position.

Despite this familiarity, it did present some challenges. I had to ask myself how I wanted my resume formatted, and how to best present the information that employers would see when they viewed my resume.

I am a huge fan of Material Design and was happy to find Google's Material Design Lite (MDL), which I used to give my resume site its structure. With this tool I was able to make quick work of the site's formatting.

3. Cascading Style Sheets (CSS)

CSS is a style sheet language that provides a way to change the presentation of an HTML webpage. It is capable of manipulating the appearance and functionality of entire web pages and individual HTML elements.

As mentioned, I had some past experience with CSS, but it is admittedly not a tool I am skilled with. Aside from floating elements and the occasional cursor change, I relied on MDL to take care of most of the appearance. I did learn some tenets of design from this step, specifically that every interactive element should change the cursor from an arrow to a pointer, and that the content of the page should be padded away from the edge of the screen.

4. Static Website

A static website is made up of fixed HTML documents that are served exactly as they are stored. Each page must be edited individually, even when pages share pieces like a header or footer. A dynamic website, in contrast, uses a server-side technology to assemble each page from several different documents when a user visits it.

This was the first step that involved setting up and working with resources in Azure. I created a storage account configured to host a static website and uploaded my index.html file. It was at this point that I realized I did not know as much about working in the cloud as I had thought. Luckily, the documentation and other resources provided by Microsoft and other developers made setting up the storage account a much simpler process.

5. HyperText Transfer Protocol Secure (HTTPS)

HTTPS is a web protocol that uses encryption to securely transfer documents from a web server to a web browser. HyperText Transfer Protocol (HTTP) is the application-layer protocol with the same function but without encryption.

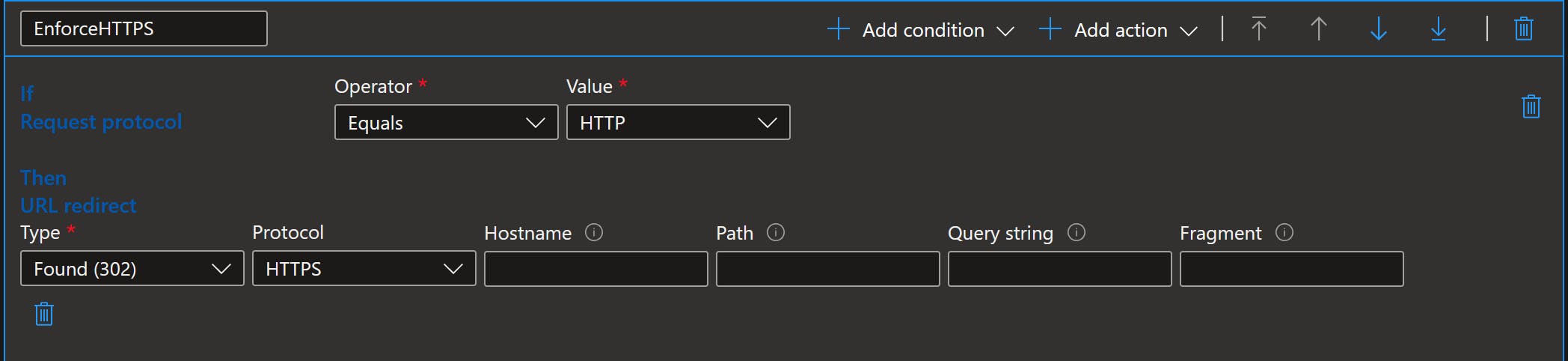

When the static webpage was set up, an endpoint was created to make it accessible from the web. I was happy to see this step included in the challenge after reading up on shifting security to the left in the Software Development Life Cycle (SDLC). An encrypted transmission ensures that my document reaches any potential employer exactly as I intended. The solution in Azure was simple: I configured the endpoint's rules engine to redirect to HTTPS whenever a request is received.
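
The redirect rule boils down to logic like the following. This is only a sketch in plain JavaScript, not the Azure rules engine configuration itself; the function name `redirectToHttps` and the shape of the returned object are made up for illustration.

```javascript
// Hypothetical helper that mimics an "HTTP to HTTPS" redirect rule.
// Returns a redirect description for plain-HTTP requests, or null
// when the request is already encrypted and can be served as-is.
function redirectToHttps(requestUrl) {
  const url = new URL(requestUrl);
  if (url.protocol === "https:") {
    return null; // already secure, no redirect needed
  }
  url.protocol = "https:";
  // 301 (Moved Permanently) is one common choice for this kind of redirect.
  return { status: 301, location: url.toString() };
}

console.log(redirectToHttps("http://www.joshuacrawford.dev/index.html"));
```

The path is preserved, so a visitor who types the plain HTTP address lands on the same page over an encrypted connection.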

6. Domain Name System (DNS)

DNS is commonly described as the "phone book of the Internet". It maps a domain name to the Internet Protocol (IP) address of the server that hosts it. This means we don't have to remember 151.101.193.164 and can instead type nytimes.com into our browser and be directed to the right page.

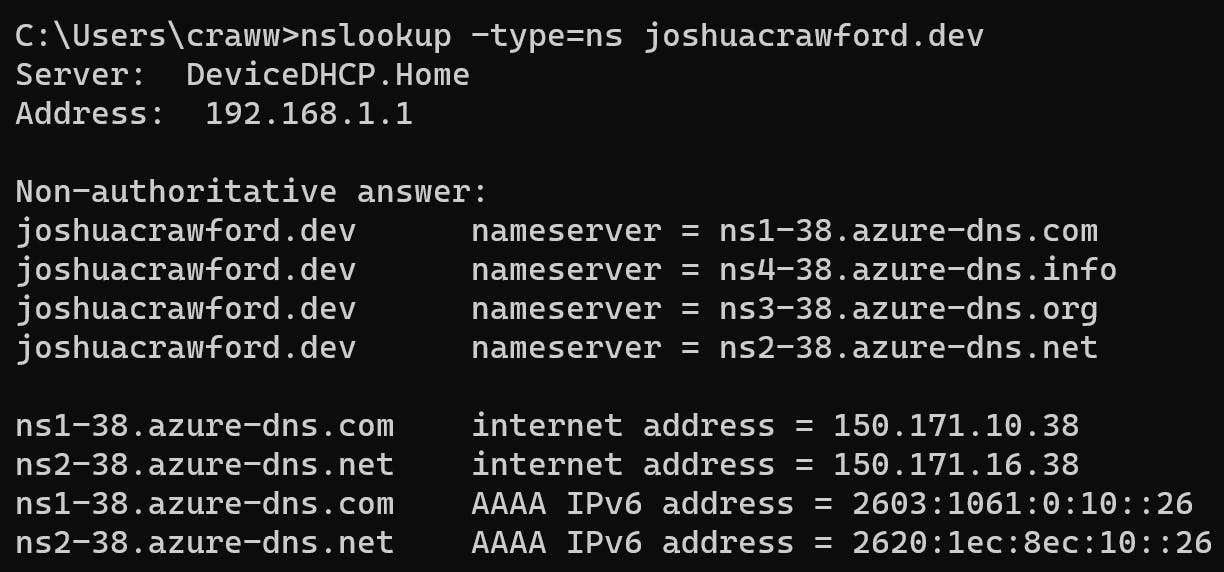

Doing this in Azure required provisioning an Azure Front Door and CDN Profile to allow access to my static webpage. The endpoint initially used a generic Azure web hosting address under the .azureedge.net domain. I purchased joshuacrawford.dev from Google Domains. To get the custom domain working with this endpoint, I had to switch the domain's name servers from the Google-hosted servers to my Azure-hosted name servers. It took time and a few tries to enter the name servers properly, but with the help of the nslookup command I was able to confirm that they had been transferred to Azure.
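
The same name server check can be scripted. The sketch below is not what was used for this project (that was nslookup); it assumes Node.js, and the helper names are hypothetical. It also assumes that Azure DNS name servers live under the azure-dns.com, .net, .org, and .info domains.

```javascript
// Pure check: do all of the given name servers look like Azure DNS name servers?
// Assumption: Azure DNS name servers use the azure-dns.com/.net/.org/.info domains.
function allAzureNameServers(nameServers) {
  const azurePattern = /\.azure-dns\.(com|net|org|info)\.?$/i;
  return nameServers.length > 0 && nameServers.every((ns) => azurePattern.test(ns));
}

// Looks up the NS records for a domain, roughly what `nslookup -type=ns` reports.
// Needs Node.js and network access, so it is defined here but not called.
async function delegatedToAzure(domain) {
  const { resolveNs } = await import("node:dns/promises");
  const nameServers = await resolveNs(domain);
  return allAzureNameServers(nameServers);
}
```

Calling `delegatedToAzure("joshuacrawford.dev")` would resolve to `true` only once every name server returned for the domain is an Azure one, which is a quick way to tell whether a name server change has taken effect.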

Another difficulty was that, once the name servers were set up properly, the site only resolved correctly with www. prepended to the domain name. Azure allows Transport Layer Security (TLS) certificates to be placed on custom domains but not on the apex domain (that is, the domain name without www. in front of it). This is why typing www.joshuacrawford.dev worked while joshuacrawford.dev alone did not, especially over HTTPS. Because the apex domain needed its own TLS certificate, I used Certbot from the Electronic Frontier Foundation to generate one so that https://joshuacrawford.dev/ would work properly. The certificate was free; the only downside is the lack of automatic renewal.

Applying the certificate required provisioning an Azure Key Vault and storing the generated certificate inside it. From there, the apex domain can use the certificate stored in the key vault to enable HTTPS, which removes the need to add www. before the domain.

7. JavaScript

JavaScript is used to make webpages interactive with the user. This makes webpages more dynamic and enjoyable to use and is the third of the core technologies of the World Wide Web along with HTML and CSS.

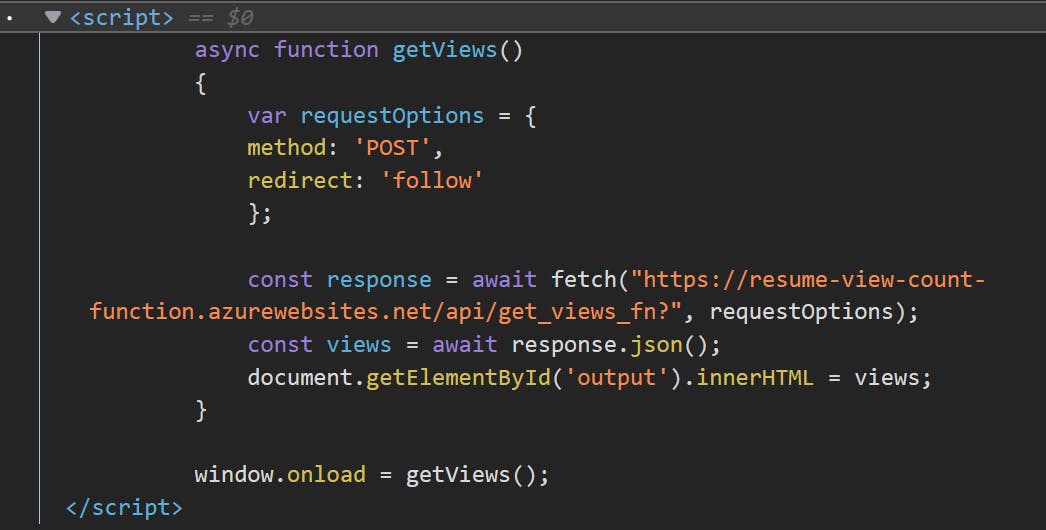

The webpage needs JavaScript so that it can contact my API. When someone visits the page, a POST request is sent to the API, which reads the current view count, increments it by one, and returns it to the webpage to be displayed. I previously had experience with the Angular framework, which is built on TypeScript and handles the output of an API response differently. Luckily, all that was needed in vanilla JavaScript was to map the output to a tag with a custom ID in the sidebar.
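
A minimal sketch of that flow is shown below. It is not the code from the site: the endpoint URL, the element ID `view-count`, and the `{ "count": ... }` response shape are all assumptions made for illustration.

```javascript
// Hypothetical values: the real endpoint URL and element ID are not given in this post.
const API_URL = "https://example.com/api/viewcount";
const COUNTER_ELEMENT_ID = "view-count";

// Writes the count from the API payload into the given element.
// Assumes the API answers with JSON shaped like { "count": 42 }.
function renderViewCount(element, payload) {
  element.textContent = String(payload.count);
}

// Sends the POST request and displays the returned count.
// fetchFn and doc default to the browser globals and can be swapped out in tests.
async function updateViewCount(fetchFn = fetch, doc = document) {
  const response = await fetchFn(API_URL, { method: "POST" });
  if (!response.ok) {
    throw new Error(`View count request failed: ${response.status}`);
  }
  renderViewCount(doc.getElementById(COUNTER_ELEMENT_ID), await response.json());
}
```

In a browser, the function could be started with something like `window.addEventListener("DOMContentLoaded", () => updateViewCount().catch(console.error));` so that a failed request is logged instead of breaking the rest of the page.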

8. Database

Originally, I had planned to use Azure's Cosmos DB, a document-based database management system. Instead, I chose the challenge's recommended option, the Table Storage service built into the storage account that holds the static webpage. This allowed a simple key/attribute relationship for accessing the view count. Since I am only working with a single value, it made sense to use Table Storage and tackle Cosmos DB in a later project.
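
To make the key/attribute idea concrete, here is a small in-memory stand-in for a table. It does not talk to Azure; it only mirrors how Table Storage addresses each entity by a partition key and a row key. The key values and the `count` property name are invented for this sketch.

```javascript
// In-memory stand-in for a table: entities are addressed by partition key + row key.
// The key values and the "count" property name are made up for this sketch.
const table = new Map();

function entityKey(partitionKey, rowKey) {
  return `${partitionKey}|${rowKey}`;
}

// Inserts the entity, or replaces the one already stored under the same keys.
function upsertEntity(entity) {
  table.set(entityKey(entity.partitionKey, entity.rowKey), { ...entity });
}

function getEntity(partitionKey, rowKey) {
  return table.get(entityKey(partitionKey, rowKey));
}

// The whole "database" for the view counter is one entity with one attribute.
upsertEntity({ partitionKey: "resume", rowKey: "views", count: 0 });
console.log(getEntity("resume", "views").count);
```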

9. Application Programming Interface (API)

An API provides a way for two or more programs to interact through a defined interface rather than by reaching into each other directly. For the challenge, the static webpage contacts Table Storage through the API to access, update, and return the number of views on the page.

While I have created APIs in the past, primarily for database access, I did not have experience building an API with serverless technologies like Azure Functions (the equivalent of AWS Lambda). Because the Azure Function is created with an HTTP trigger, its code can be executed by a POST request from the JavaScript embedded in the static webpage, and it can send a return value back to the calling page.
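
The request/response contract can be sketched as a plain function. The real function is written in C# and reads from Table Storage; the JavaScript below only imitates the idea of an HTTP trigger. The handler name, the request and response shapes, and the in-memory `store` are hypothetical, and this is not the Azure Functions signature.

```javascript
// Stand-in for the stored view count; the real function reads and writes Table Storage.
const store = { count: 0 };

// Hypothetical handler: takes a minimal request object and returns a response object.
// This mirrors the idea of an HTTP trigger, not the actual Azure Functions signature.
function handleViewCountRequest(request, counterStore = store) {
  if (request.method !== "POST") {
    return { status: 405, headers: { Allow: "POST" }, body: "" };
  }
  counterStore.count += 1; // read, increment, and save in one step
  return {
    status: 200,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ count: counterStore.count }),
  };
}

console.log(handleViewCountRequest({ method: "POST" }).body); // prints {"count":1}
```

Rejecting anything other than POST keeps the counter from being incremented by requests that were never meant to change it.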

Cross-Origin Resource Sharing (CORS) has been a headache for me in the past when developing an API. With Azure Functions, it is a simple matter of going into the API settings and adding the domain you want to the allowlist.
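
What the allowlist does can be sketched in a few lines. Azure handles this for you once the domain is added in the settings; the function below is only an illustration of the check, and the list of origins is an assumption based on the site's domain.

```javascript
// Origins allowed to call the API; the list is an assumption based on the site's domains.
const ALLOWED_ORIGINS = ["https://joshuacrawford.dev", "https://www.joshuacrawford.dev"];

// Returns the CORS response headers for a request's Origin header.
// An origin that is not on the allowlist gets no Access-Control-Allow-Origin header,
// so the browser refuses to expose the response to the calling page.
function corsHeaders(origin, allowedOrigins = ALLOWED_ORIGINS) {
  if (!allowedOrigins.includes(origin)) {
    return {};
  }
  return { "Access-Control-Allow-Origin": origin, Vary: "Origin" };
}

console.log(corsHeaders("https://joshuacrawford.dev"));
```

Note that the match is on the full origin, scheme included, so the www. and apex versions of a site each need their own entry.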

10. C#

The challenge recommends Python for this step. Despite this, I chose to use C# which I'm familiar with from my day-to-day job. For this level of implementation, I wanted to use Microsoft's .NET framework to interact with their cloud. I plan to pursue using Python for my next project which will have a more complicated set of functions and data structures to be used.

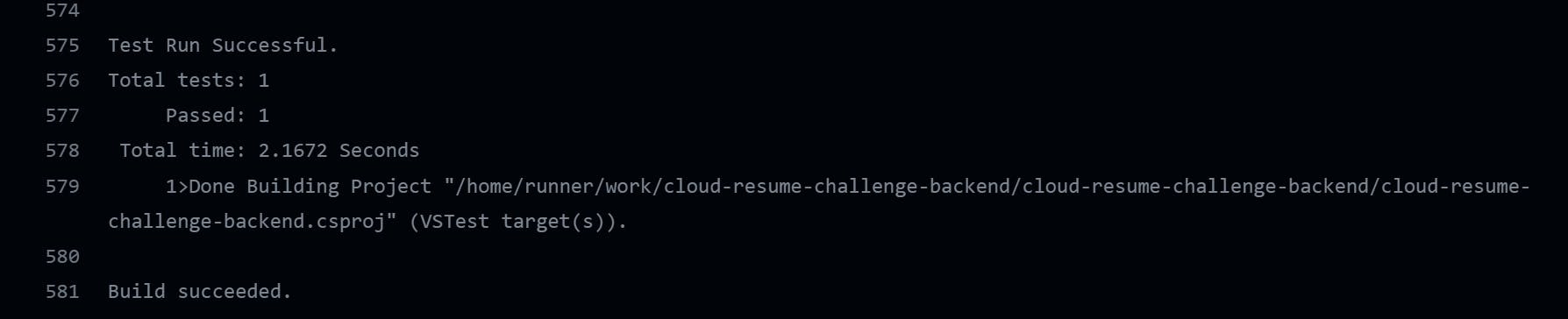

11. Tests

Test-driven development is a major topic in the industry, and my first experience with it was in my full-time position. Looking back, I wish I had written this test before working on the function's actual code. Using Visual Studio Code's built-in unit testing suite, I was able to set up, test, and clean up the view count function to confirm it worked before deployment. This gave me a great deal of reassurance on each push of the code.
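
The set up, test, and clean up phases look roughly like this. The actual test is written in C# against the real function; the JavaScript below is a stand-in with hypothetical names that only shows the three phases.

```javascript
// Function under test: a stand-in for the view count logic.
function incrementViewCount(store) {
  store.count += 1;
  return store.count;
}

// Tiny test in three phases: set up, exercise and check, clean up.
function testIncrementViewCount() {
  const store = { count: 10 }; // set up a known starting value
  try {
    const result = incrementViewCount(store);
    if (result !== 11) {
      throw new Error(`expected 11, got ${result}`);
    }
    return "passed";
  } finally {
    store.count = 0; // clean up even when the check fails
  }
}

console.log(testIncrementViewCount());
```

Putting the cleanup in `finally` means a failing check cannot leave test data behind for the next run.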

Later in the project as I tried to set up CI/CD pipelines this test would cause issues for me. The primary issue was the retrieval of environment variables to get the connection string for table storage. I learned a hard lesson about switching between local and deployed testing environments. All the effort to make sure the test was operational was worth it for the satisfying feeling of seeing the test pass locally and in the repository.
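
One way to make the switch between environments less painful is to read the connection string in a single place and fail with a clear message when it is missing. This is a sketch of that idea, not necessarily how the issue was solved in this project; the variable name `TABLE_STORAGE_CONNECTION_STRING` is hypothetical.

```javascript
// Hypothetical variable name; use whatever name the function app and pipeline agree on.
const CONNECTION_STRING_VAR = "TABLE_STORAGE_CONNECTION_STRING";

// Reads the connection string from the environment and fails loudly when it is missing,
// so a misconfigured CI run reports the real cause instead of an obscure storage error.
function getConnectionString(env = process.env) {
  const value = env[CONNECTION_STRING_VAR];
  if (!value) {
    throw new Error(`Environment variable ${CONNECTION_STRING_VAR} is not set`);
  }
  return value;
}
```

Locally the variable can come from the developer's shell or settings file, while in the pipeline it has to be supplied by the CI system; either way the code under test stays the same.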

12. Infrastructure-as-Code (IaC)

Infrastructure-as-Code lets the structure of cloud deployments be recorded in source control and brings uniformity to deployments across an organization. While the challenge recommends Azure Resource Manager (ARM) templates for IaC, I wanted exposure to Terraform, an open-source, cloud-agnostic, declarative IaC tool from HashiCorp.

I have worked on two such projects at my job, both of which relied heavily on referencing previous implementations by my team, so it was nice to start from the beginning with an entirely new tool. An IaC file is daunting at first glance, but working with Terraform has shown me just how useful, and necessary, it is to have your infrastructure declared programmatically and stored in source control.

It was at this step that I realized that provisioning a resource in the cloud is not a simple matter of selecting the resource and hitting the create button. A single resource, the function app for example, required the creation of an App Service Plan, the function application, and the function inside the application. IaC tools like Terraform make that clear and hassle-free, as they will not deploy a resource unless all of its dependencies are already in place or declared.
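
The dependency idea can be illustrated with a small ordering routine. This is not how Terraform is implemented and it is not Terraform syntax; it is a JavaScript sketch with a hypothetical resource graph based on the function app example above.

```javascript
// Hypothetical resource graph: each resource lists what must exist before it.
const resources = {
  app_service_plan: [],
  function_app: ["app_service_plan"],
  function: ["function_app"],
};

// Depth-first ordering: a resource is emitted only after all of its dependencies.
// Throws when a dependency was never declared or when dependencies form a cycle.
function deploymentOrder(graph) {
  const order = [];
  const state = {}; // name -> "visiting" | "done"

  function visit(name) {
    if (!(name in graph)) throw new Error(`Undeclared dependency: ${name}`);
    if (state[name] === "done") return;
    if (state[name] === "visiting") throw new Error(`Dependency cycle at: ${name}`);
    state[name] = "visiting";
    graph[name].forEach((dependency) => visit(dependency));
    state[name] = "done";
    order.push(name);
  }

  Object.keys(graph).forEach((name) => visit(name));
  return order;
}

// Order: app_service_plan, then function_app, then function.
console.log(deploymentOrder(resources));
```

The order in which the resources are written down does not matter; what matters is that every dependency is declared somewhere, which is the same guarantee that made the Terraform files easier to reason about.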

Note: Terraform did not allow me to provision the function app service plan in the free or shared SKU. Because of this, I did have to pay about $30 in cloud fees. Luckily I was able to manually switch to the Free SKU inside Azure Portal without affecting performance. I have yet to find out why Terraform does not allow the Free SKU to be provisioned.

Terraform Files: cloud-resume-challenge-backend/Terraform at main · joshua-wayne-crawford/cloud-resume-challenge-backend (github.com)

13. Source Control

Source control preserves the history of a project and makes it easier to apply software engineering practices such as reviewing and reverting changes. I chose to use GitHub to store this and any future projects in my portfolio. As with IaC, I know there is an entire world of Git on the command line that can be leveraged, but for now I kept to standard commit and push cycles from inside Visual Studio Code.

Front End Repository: joshua-wayne-crawford/cloud-resume-challenge-frontend: All steps of the cloud resume challenge (github.com)

Back End Repository: joshua-wayne-crawford/cloud-resume-challenge-backend: backend of cloud resume challenge (github.com)

14. Continuous Integration/Continuous Deployment (CI/CD) - Back End

Continuous Integration and Continuous Deployment allow for a developer to push code and have it tested and deployed automatically. This allows agile teams to minimize downtime and complexity in delivering a product.

GitHub has a built-in tool, GitHub Actions, for creating CI/CD pipelines. As with IaC, there is plenty of documentation and there are many templates available to get a pipeline up and running quickly. Starting from a template that builds and tests the back-end code, I only needed to add the deployment step. Because GitHub Actions requires each step to succeed before the next one begins, I could be sure the test had passed and the code was working before it was deployed to the cloud and reached visitors to my website.
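
That gating behavior can be modeled in a few lines. This is a JavaScript sketch of the concept, not a GitHub Actions workflow file; the step names and the `runPipeline` helper are hypothetical.

```javascript
// Runs pipeline steps in order and stops at the first failure,
// so a later step such as "deploy" never runs after a failed "test".
function runPipeline(steps) {
  const completed = [];
  for (const step of steps) {
    if (!step.run()) {
      return { succeeded: false, completed, failedAt: step.name };
    }
    completed.push(step.name);
  }
  return { succeeded: true, completed, failedAt: null };
}

const result = runPipeline([
  { name: "build", run: () => true },
  { name: "test", run: () => false }, // simulate a failing unit test
  { name: "deploy", run: () => true },
]);
console.log(result);
```

With the simulated test failure, only the build step completes and the deploy step is never reached, which is exactly the protection the pipeline provides.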

15. CI/CD - Front End

Without any functional code, the pipeline for the Front End was much simpler. A template was available to get the HTML source code uploaded to my Azure environment. The only step that needed to be added was a purge of my CDN endpoint to allow changes to be presented immediately after deployment was successful.

This step showed the huge benefit of CI/CD, as uploading the files and purging the endpoint by hand takes a lot of effort. With this pipeline, I can now make quick changes to wording and styling and have them available almost immediately to anyone who visits my resume.

16. Blog Post

You're reading it!

Conclusion

The Cloud Resume Challenge was a great way to put the knowledge I gained from earning my AZ-900 certification into practice. Cloud networking, CI/CD, and serverless APIs were all areas in which I had little to no experience, so being able to work through them from the beginning has been extremely rewarding. The genius I've found in this project is that it is my resume, so I have a desire to keep it polished and up to date instead of leaving the project as a footnote, ironically, on my resume.

The challenge uses low-cost technologies; however, there was still a cost associated with working in the cloud. The creators of the challenge have done a great job selecting tools whose price will not keep anyone who wants to take the challenge from participating.

Overall, I highly recommend this challenge to anyone interested in pursuing it. I feel much more confident working in the cloud and will be applying the knowledge I gained from this project in my next effort.